Summary

On 18 November 2025 a major Cloudflare outage made many well-known websites and apps slow or unreachable. Services such as ChatGPT, music platforms, and social networks were affected. The incident showed how a single third-party failure can ripple through many services. Below is a plain-language explanation of what happened, why it matters, what companies should do to reduce the risk, and what users can do to stay prepared.

What happened

Cloudflare helps sites load faster, block attacks, and direct traffic. An internal failure at Cloudflare degraded those functions, so sites that rely on it couldn’t serve pages, complete security checks, or route requests correctly. Many users who visited affected sites saw error pages or nothing at all. The problem was in the shared service, not in the individual websites.

Why it matters

Many popular services depend on the same infrastructure. When a core provider fails, many otherwise independent services can go down at once. Outages damage trust, interrupt business and personal tasks, and reveal where companies have come to rely too heavily on a single outside vendor.

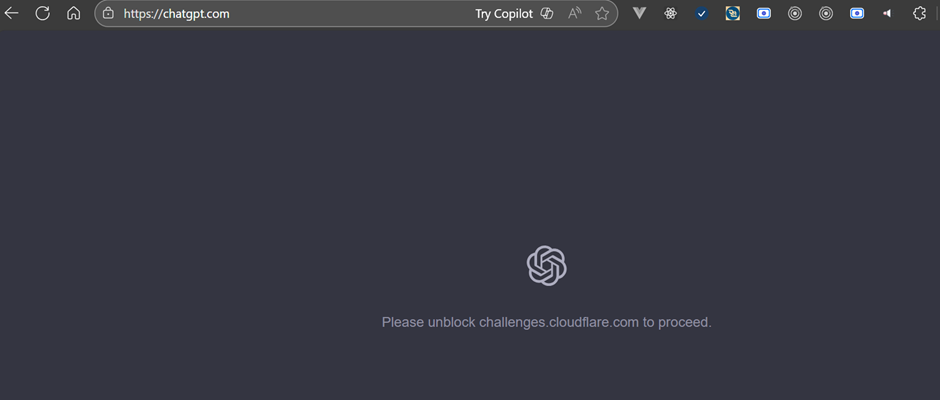

How this shows up for users

You might not be able to log in, load pages, or complete payments. Apps that rely on web services may freeze or return errors. In some cases services will switch to a read-only mode where you can view information but not make changes. Time-sensitive workflows and communications can be interrupted until systems are restored.

What companies should do (plain terms)

Companies reduce risk by assuming any external supplier can fail. Practical steps include adding a second provider for critical services, keeping recent content cached close to users, and offering a simple read-only mode so people can still access important information. Architect systems so a failure in one area doesn’t cascade into others, set sensible timeouts so systems don’t hang trying to reach a failing service, and run regular, safe simulations of outages to make sure backups actually work. Maintain consistent security settings across providers and keep clear contracts and escalation paths with vendors so fixes happen faster.
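
For teams that want to see the idea in code, here is a minimal sketch of three of the steps above: a timeout on every call, a second provider, and a cached read-only fallback. It is an illustration under stated assumptions, not a production design. The names `FallbackClient`, `ProviderError`, and `Result` are hypothetical, and the two "providers" are stand-in functions rather than real network calls.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


class ProviderError(Exception):
    """Hypothetical error a provider raises when it fails or times out."""


@dataclass
class Result:
    body: str
    source: str      # name of the provider that answered, or "cache"
    read_only: bool  # True when the content is a cached copy


# A provider is any callable taking (key, timeout in seconds) and returning
# content. A real one would pass the timeout to its HTTP client.
Provider = Callable[[str, float], str]


class FallbackClient:
    def __init__(self, providers: List[Tuple[str, Provider]], timeout_s: float = 2.0):
        self.providers = providers      # tried in order: primary first
        self.timeout_s = timeout_s      # keeps calls from hanging on a dead service
        self.cache: Dict[str, str] = {}

    def get(self, key: str) -> Result:
        for name, fetch in self.providers:
            try:
                body = fetch(key, self.timeout_s)
            except ProviderError:
                continue                # this provider is down, try the next one
            self.cache[key] = body      # remember the last good copy
            return Result(body, name, read_only=False)
        if key in self.cache:
            # Every provider failed: serve the last good copy, marked read-only.
            return Result(self.cache[key], "cache", read_only=True)
        raise ProviderError(f"all providers failed and no cached copy of {key!r}")


# Stand-in providers for a small outage drill (assumptions, not real services).
def failing_primary(key: str, timeout_s: float) -> str:
    raise ProviderError("primary unreachable")


def healthy_secondary(key: str, timeout_s: float) -> str:
    return f"content for {key}"


client = FallbackClient([("primary", failing_primary), ("secondary", healthy_secondary)])
first = client.get("home")    # primary fails, secondary answers and is cached

client.providers = [("primary", failing_primary)]  # simulate a total outage
second = client.get("home")   # served from cache, flagged read-only
```

The last few lines double as a tiny outage drill: they switch off the healthy provider and check that users would still get the cached page. Running a drill like this regularly is one way to confirm that the backup path actually works before a real incident.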

What users can do

Keep local copies of critical files and have at least one alternative tool or channel for urgent tasks. If a service announces a read-only mode, postpone nonessential actions until full service is restored. Watch official status updates from the affected service for accurate information.

Small checklist for teams

- Add a backup provider for critical services.

- Cache key content and prepare a read-only fallback.

- Practice outage drills and keep vendor runbooks ready.

Final thought

This outage was a reminder that shared infrastructure creates shared risk. Diversify critical dependencies, keep usable fallbacks, test failovers regularly, and communicate plainly with users. Those steps won’t stop every outage, but they will make outages shorter, less disruptive, and easier for people to cope with.